How To A/B Test AI SDR Personalization

A/B testing AI SDR personalization helps you find what works best to improve open rates, replies, and conversions. By comparing two outreach strategies on a segmented prospect list, you can use data - not guesswork - to refine your messaging. Here’s the process in a nutshell:

Segment your audience: Group prospects by company size, job role, or behavior for accurate comparisons.

Test one variable at a time: Focus on elements like subject lines, opening lines, CTAs, or timing.

Set measurable goals: Track metrics like open rates (typically 20–25% for cold outreach), response rates (typically 2–5%), and meeting bookings.

Run fair tests: Ensure random assignment, consistent timing, and adequate sample sizes for reliable data.

Analyze results: Look for statistically valid improvements and connect findings to business outcomes.

The key is to use these insights to scale effective strategies. Tools like AI SDR Shop can help you find advanced solutions to optimize and automate your personalization efforts across larger audiences.

This video shows how to A/B split test AI-written cold emails within Clay:

::: @iframe https://www.youtube.com/embed/XPOF5RwrEsQ :::

Setting Up Your A/B Test: Requirements and Preparation

The foundation of any successful A/B test lies in having quality data, clearly defined audience segments, and specific objectives. These elements ensure your results are reliable and actionable.

When it comes to data, the more detailed, the better. Comprehensive prospect information - like company details, job roles, recent news, and behavioral data - enables a deeper level of personalization, which is key to impactful testing.

Finding and Segmenting Your Target Audience

For accurate results, you need well-defined audience segments where members share similar traits. Focus on factors like company size, industry, job function, or their stage in the buying journey.

Company-based segmentation: This is ideal for tailoring industry-specific messages. Group prospects by company size or industry to address their particular challenges effectively.

Role-based segmentation: If your goal is to test messaging for different job functions, segment by roles to ensure your content speaks directly to their priorities.

Behavioral segmentation: This approach uses actions, like downloading content or visiting your site, to form test groups. Align messages with where prospects are in their buying process.

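Aim for at least 100–200 contacts in each segment as a working minimum. Treat that as a floor rather than a guarantee: detecting a small lift on a low baseline metric, such as a reply rate of a few percent, usually takes considerably more contacts per group.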

Selecting Personalization Variables to Test

To understand what truly impacts your metrics, focus on testing one variable at a time. While AI-powered SDRs can personalize numerous elements, prioritize those most likely to move the needle.

Subject line personalization: This often has the greatest influence on open rates. Test different approaches, like inserting the company name versus addressing industry pain points. Keep subject lines under 50 characters to avoid truncation on mobile devices.

Opening line variations: The opening line sets the tone for your message. Test whether referencing a recent milestone outperforms mentioning a shared connection in driving engagement.

Call-to-action (CTA) personalization: CTAs can significantly impact response rates. Compare specific asks, like "Schedule a 15-minute call about [challenge]", with more generic requests.

Timing and frequency: Experiment with when and how often you reach out. Test variables like sending emails on Tuesday mornings versus Thursday afternoons, or using a three-email sequence versus five.
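
One way to enforce the one-variable rule is to describe each variant as a small record and check that the control and test versions differ in exactly one field. The sketch below is a minimal example; the `Variant` fields and the helper names are hypothetical, and the 50-character limit mirrors the subject-line guideline above.

```python
from dataclasses import dataclass, asdict, replace


@dataclass(frozen=True)
class Variant:
    subject: str
    opening_line: str
    cta: str
    send_slot: str  # e.g. "Tue 09:00"


def changed_fields(control: Variant, test: Variant) -> list[str]:
    """Return the names of the fields that differ between the two variants."""
    control_fields, test_fields = asdict(control), asdict(test)
    return [name for name in control_fields if control_fields[name] != test_fields[name]]


def validate_pair(control: Variant, test: Variant, max_subject_len: int = 50) -> list[str]:
    """Return a list of problems; an empty list means a clean single-variable test."""
    problems = []
    diff = changed_fields(control, test)
    if len(diff) != 1:
        problems.append(f"expected exactly one changed field, found {len(diff)}: {diff}")
    for label, variant in (("control", control), ("test", test)):
        if len(variant.subject) >= max_subject_len:
            problems.append(
                f"{label} subject is {len(variant.subject)} characters; keep it under {max_subject_len}"
            )
    return problems


control = Variant(
    subject="Quick question about your outbound process",
    opening_line="Saw your team is hiring SDRs.",
    cta="Open to a 15-minute call?",
    send_slot="Tue 09:00",
)
# Copy the control and change only the subject line.
test = replace(control, subject="Acme's outbound process")
print(validate_pair(control, test))
```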

Defining Goals and Success Metrics

Your goals and metrics should align with your sales objectives and be measurable within the test period. Different stages of your sales funnel will call for different metrics, so choose those that reflect your current priorities.

Open rates: These measure how well your subject line is performing. For cold outreach, aim for open rates in the 20–25% range.

Response rates: This metric shows whether your message resonates with prospects. Typical response rates for cold outreach hover around 2–5%.

Meeting booking rates: Track how many responses lead to actual sales conversations. This metric connects engagement to tangible sales opportunities.

Pipeline contribution: This measures the broader business impact of your efforts, including the number of contacts and deal sizes added to your pipeline.

Set clear, measurable targets based on historical data. For example, aim to increase your response rate from 3% to 4.5%. Use recent campaign data to document your baseline performance for each metric. This baseline will serve as your control group benchmark, helping you determine whether your test results show genuine improvements or just normal fluctuations.
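
To see how many contacts a target like "3% to 4.5%" actually requires, you can use the standard sample-size approximation for comparing two proportions. The sketch below assumes a two-sided test at a 5% significance level with 80% power; both are common defaults rather than values taken from this article, and the function name is illustrative.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_group(baseline, target, alpha=0.05, power=0.80):
    """Approximate contacts needed per group to detect baseline -> target.

    Uses the normal-approximation formula for two independent proportions.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_beta = NormalDist().inv_cdf(power)
    pooled = (baseline + target) / 2
    numerator = (
        z_alpha * sqrt(2 * pooled * (1 - pooled))
        + z_beta * sqrt(baseline * (1 - baseline) + target * (1 - target))
    ) ** 2
    return ceil(numerator / (target - baseline) ** 2)


# The 3% -> 4.5% response-rate target from the example above.
print(sample_size_per_group(0.03, 0.045))
```

Under these assumptions the example works out to roughly 2,500 contacts per group, which is why small lifts on low baseline rates are hard to confirm with a list of a few hundred prospects. Larger expected lifts need far fewer contacts.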

Once your objectives and metrics are in place, you’re ready to create and execute your test groups to validate your hypotheses.

Creating and Running Your A/B Test

Once you've laid the groundwork, the success of your A/B test hinges on how well you execute it. With your audience segments defined and clear metrics established, it's time to move forward with structuring and running your test.

Creating Clear Hypotheses

Every A/B test starts with a solid hypothesis - a clear, testable statement about what you expect to happen. This should tie directly to the goals you’ve already outlined. Your hypothesis should clearly state what you're testing, what outcome you predict, and the reasoning behind it.

A strong hypothesis might look like this: "If I change [specific variable], then [target metric] will improve because [reasoning based on user behavior or psychology]."

For instance, instead of vaguely stating, "Personalized subject lines perform better", try: "If I include the prospect's company name in the subject line, then open rates will increase because recipients are more likely to notice emails that feel tailored to their organization."

Another example could be: "If I reference a recent funding round in the opening line, then response rates will improve because prospects will view the sender as informed and genuinely interested in their business."

Make sure to document each hypothesis. This keeps your expectations consistent throughout the test and helps you understand why certain approaches succeed or fall short.

Setting Up Test Groups and Maintaining Consistency

Random assignment is key to ensuring unbiased results. Use your AI SDR platform to distribute prospects evenly between your control group (current approach) and test group (new personalization). Avoid sorting by variables like company size, industry, or lead quality to keep the test fair.

For larger audiences, a 50/50 split is ideal. If you're working with smaller groups, a 70/30 split might be more practical, with the larger group using the approach you’re more confident in.
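
If your platform does not handle assignment for you, a seeded shuffle is a simple way to split a list at random. This is a minimal sketch: the function name, the default seed, and the ID format are assumptions for illustration.

```python
import random


def assign_groups(prospect_ids, control_share=0.5, seed=2024):
    """Randomly split prospects into control and test groups.

    A fixed seed makes the split reproducible, so it can be audited later.
    """
    if not 0 < control_share < 1:
        raise ValueError("control_share must be between 0 and 1")
    ids = list(prospect_ids)
    random.Random(seed).shuffle(ids)  # shuffle a copy; the input is left untouched
    cut = round(len(ids) * control_share)
    return {"control": ids[:cut], "test": ids[cut:]}


prospects = [f"prospect-{i}" for i in range(1000)]
even_split = assign_groups(prospects)                       # 50/50
skewed_split = assign_groups(prospects, control_share=0.7)  # 70/30
```

Because the shuffle ignores company size, industry, and lead quality, those traits end up spread across both groups by chance rather than by any sorting rule.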

Consistency is critical. Both groups should receive emails at the same time, use identical sending domains, and follow the same sequence schedule. The only difference between the two groups should be the specific variable you're testing. Tone, writing style, and all other factors must remain the same.

Also, keep an eye on external factors, such as industry news or events, that could impact response rates across both groups. Once your groups are set and variables controlled, you can focus on gathering meaningful data.

Running Tests for Statistical Significance

The length of your test will depend on your email volume and typical response patterns. In most B2B scenarios, two to three weeks is enough to account for variations in response times.
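
A back-of-the-envelope estimate of test length can be derived from your daily send volume. The sketch below assumes you already know the contacts needed per group and allows a fixed window for late replies; the seven-day window and all names are illustrative assumptions.

```python
from math import ceil


def estimated_test_days(required_per_group, daily_sends, groups=2, reply_window_days=7):
    """Days needed to email every contact, plus time to wait for late replies."""
    if daily_sends <= 0:
        raise ValueError("daily_sends must be positive")
    sending_days = ceil(required_per_group * groups / daily_sends)
    return sending_days + reply_window_days


# Example: 500 contacts per group at 100 emails per day.
print(estimated_test_days(500, 100))
```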

Decide on your sample size or end date before you launch, and judge the result once you reach it rather than stopping the moment one variant pulls ahead. Many AI SDR platforms include built-in tools to calculate significance, often alerting you when results reach a 95% confidence level.

Avoid the temptation to check results too often or end the test early just because one approach seems to be leading after a few days. Early trends can be misleading and may not reflect the full picture.

Timing is also important. Avoid running tests during periods that could disrupt normal behavior, like major holidays or industry-wide events.

Finally, document everything - technical glitches, updates to your prospect list, and any external events that might have influenced the test. These records will be invaluable when analyzing results and planning your next steps to refine your personalization strategies.

Measuring and Analyzing Test Results

Once your test is complete and you've gathered data, the real challenge begins. Raw numbers alone don't paint the full picture - you need to dig into the trends and patterns to truly understand the impact.

Comparing Key Performance Metrics

Start by comparing the metrics that matter most. Open rates often serve as the first sign of success. Even a small increase - say, 5 percentage points - can mean hundreds of additional prospects engaging with your message on a list of several thousand.

But open rates are just the beginning. Reply rates offer a deeper look at engagement quality. For example, if a personalized approach raises replies from 3.2% to 4.8%, that's a 50% relative jump in engagement (1.6 percentage points in absolute terms). However, not all replies are equal. It's crucial to separate positive responses from out-of-office replies or unsubscribe requests to get a clearer picture.
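
The arithmetic behind that comparison, and the filtering of low-value replies, can be sketched in a few lines of Python. The reply labels used here (`positive`, `out_of_office`, `unsubscribe`) are hypothetical; use whatever categories your platform or team assigns.

```python
def relative_lift(control_rate, test_rate):
    """Relative change of the test rate over the control rate."""
    if control_rate <= 0:
        raise ValueError("control_rate must be positive")
    return (test_rate - control_rate) / control_rate


def positive_reply_rate(reply_labels, emails_sent):
    """Share of sent emails that drew a positive reply, ignoring other reply types."""
    positive = sum(1 for label in reply_labels if label == "positive")
    return positive / emails_sent


# The example from the text: replies rise from 3.2% to 4.8%.
print(f"{relative_lift(0.032, 0.048):.0%}")  # prints 50%

labels = ["positive", "out_of_office", "unsubscribe", "positive"]
print(positive_reply_rate(labels, emails_sent=100))  # 2 positive replies out of 100 sends
```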

Then there’s the metric that impacts your bottom line the most: conversion rates. This shows how many prospects move from initial contact to becoming qualified leads. For instance, if your test group produces 12 qualified leads compared to 8 from the control group, that's a tangible improvement in business outcomes.

Another useful metric is time to response. Personalized outreach often prompts quicker replies because recipients feel the message speaks directly to their needs. By tracking whether responses come within 24 hours or days later, you can uncover valuable insights about timing.

Lastly, keep an eye on unsubscribe rates and spam complaints. These can signal if your approach is turning off prospects, helping you identify potential issues early.

These metrics provide a foundation for deeper statistical analysis.

Applying Statistical Analysis for Insights

To separate meaningful improvements from random chance, statistical analysis is essential. Use a 95% confidence level, meaning you treat a result as significant only when its p-value falls below 0.05. Ensure each group in your test has at least 100 participants as a bare minimum, and review confidence intervals to understand the range of possible outcomes.
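
If your platform does not report significance, a two-proportion z-test is one common way to compare reply rates between groups. The sketch below uses only the Python standard library. It relies on a normal approximation, so treat the output as rough when reply counts are very small; the function name and return format are illustrative.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_test(success_a, n_a, success_b, n_b, confidence=0.95):
    """Two-sided z-test for the difference between two proportions (B minus A)."""
    p_a, p_b = success_a / n_a, success_b / n_b
    diff = p_b - p_a

    # Pooled standard error for the hypothesis test.
    pooled = (success_a + success_b) / (n_a + n_b)
    se_pooled = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se_pooled == 0:
        raise ValueError("cannot test when both groups have 0% or 100% success")
    z = diff / se_pooled
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))

    # Unpooled standard error for the confidence interval on the difference.
    se_unpooled = sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z_crit = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    interval = (diff - z_crit * se_unpooled, diff + z_crit * se_unpooled)

    return {"difference": diff, "z": z, "p_value": p_value, "interval": interval}


# 3 vs 5 replies out of 100 each: the gap looks large but is not significant.
small = two_proportion_test(3, 100, 5, 100)
print(round(small["p_value"], 2))
```

A confidence interval that includes zero tells the same story as a p-value above 0.05: the data cannot rule out "no difference".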

However, don't stop at statistical significance. Consider practical significance too. For example, a statistically significant 0.5% boost in reply rates might not justify the extra time and effort required for a highly personalized approach. Balance the numbers with the resources involved to make smarter decisions.

This analytical step is key to understanding how your efforts translate into measurable business impact.

Understanding Results for Business Impact

Once you've reviewed your metrics and validated them statistically, the next step is to connect these findings to real-world business outcomes. For example, even a modest 2% improvement in reply rates can significantly increase your pipeline value when scaled across your entire prospect base.

You should also evaluate changes in cost per acquisition. If more personalized outreach adds 15 minutes per prospect but reduces your cost per qualified lead from $180 to $140, the return on investment becomes clear.
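
The trade-off can be worked through with a simple cost model. In the sketch below, every input (campaign cost, lead counts, list size, and hourly rate) is a made-up figure chosen to reproduce the $180 and $140 values above; substitute your own numbers.

```python
def cost_per_qualified_lead(base_cost, qualified_leads, prospects=0,
                            extra_minutes_per_prospect=0.0, hourly_rate=0.0):
    """Total cost (campaign spend plus extra personalization labor) per qualified lead."""
    if qualified_leads <= 0:
        raise ValueError("qualified_leads must be positive")
    labor_cost = prospects * (extra_minutes_per_prospect / 60) * hourly_rate
    return (base_cost + labor_cost) / qualified_leads


# Hypothetical control: $1,800 of spend yields 10 qualified leads.
control = cost_per_qualified_lead(1800, 10)

# Hypothetical test: same spend, 15 extra minutes on each of 200 prospects
# at $20/hour, yielding 20 qualified leads.
test = cost_per_qualified_lead(1800, 20, prospects=200,
                               extra_minutes_per_prospect=15, hourly_rate=20)

print(control, test)                       # 180.0 140.0
print(f"{(control - test) / control:.1%}")  # reduction in cost per lead
```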

Think about scalability too. A personalized strategy that works well for 200 prospects may become unsustainable for 2,000 unless you introduce automation or expand your team.

Pay attention to segment-specific performance as well. A personalized approach might resonate strongly with mid-market companies but have little effect on enterprise-level prospects. This insight can help you tailor your strategy for different audience segments.
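
A per-segment breakdown makes this kind of difference visible. The sketch below assumes each send is logged as a row with hypothetical `segment`, `group` (`control` or `test`), and `replied` fields.

```python
from collections import defaultdict


def reply_rates_by_segment(rows):
    """Return control rate, test rate, and their difference for each segment."""
    # counts[segment][group] = [emails sent, replies received]
    counts = defaultdict(lambda: {"control": [0, 0], "test": [0, 0]})
    for row in rows:
        tally = counts[row["segment"]][row["group"]]
        tally[0] += 1
        tally[1] += 1 if row["replied"] else 0

    report = {}
    for segment, groups in counts.items():
        rates = {
            group: (replied / sent if sent else None)
            for group, (sent, replied) in groups.items()
        }
        if rates["control"] is not None and rates["test"] is not None:
            rates["difference"] = rates["test"] - rates["control"]
        else:
            rates["difference"] = None  # one group had no sends in this segment
        report[segment] = rates
    return report
```

Each segment holds only a fraction of the full list, so per-segment differences are noisier than the overall result and are best treated as leads for a follow-up test.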

Finally, look beyond immediate results and consider long-term engagement patterns. Prospects who respond positively to personalized outreach might be more likely to engage in follow-up campaigns or attend product demos, creating ongoing value.

Be mindful of external factors or events that could have influenced your results, and don’t overlook qualitative feedback. Documenting insights from prospects and your sales team can reveal nuances that metrics alone might miss, helping you refine your strategy for future campaigns.

Improving and Scaling Personalization Strategies

Once you've gathered insights from your tests, the next step is to focus on scaling and fine-tuning your personalization strategies. This is where you determine whether your A/B testing efforts lead to lasting growth or remain isolated wins.

Rolling Out Winning Strategies

Start by documenting your successful personalization tactics in a clear playbook. This should include key elements like messaging frameworks, timing strategies, and the specific tactics that delivered the best results.

Roll out these strategies in phases. Begin with high-value audience segments and expand gradually, ensuring consistent outcomes before scaling further. Keep in mind that approaches that work well for smaller groups may need additional resources or automation to handle larger audiences effectively.

Pay attention to external factors as you scale. Strategies that perform well in controlled tests may lose some of their impact when applied broadly due to issues like message fatigue or seasonal shifts in buyer behavior. A phased approach helps ensure that the gains from your testing translate into measurable, real-world results.

Planning Continuous Testing

Personalization isn’t a one-and-done effort - it requires ongoing adjustments to keep up with changing buyer preferences, market trends, and competitive dynamics.

Plan to run a new personalization test every 4–6 weeks to keep your strategies aligned with seasonal changes and evolving business needs. Each new test should build on the lessons learned from previous ones, uncovering fresh opportunities to refine your approach.

Establish a feedback loop between your testing insights and your overall sales strategy. Sharing these findings with your sales and marketing teams ensures that your personalization efforts stay aligned with broader company goals and customer feedback from various channels. Regular testing helps you stay ahead of shifting buyer behaviors and market conditions.

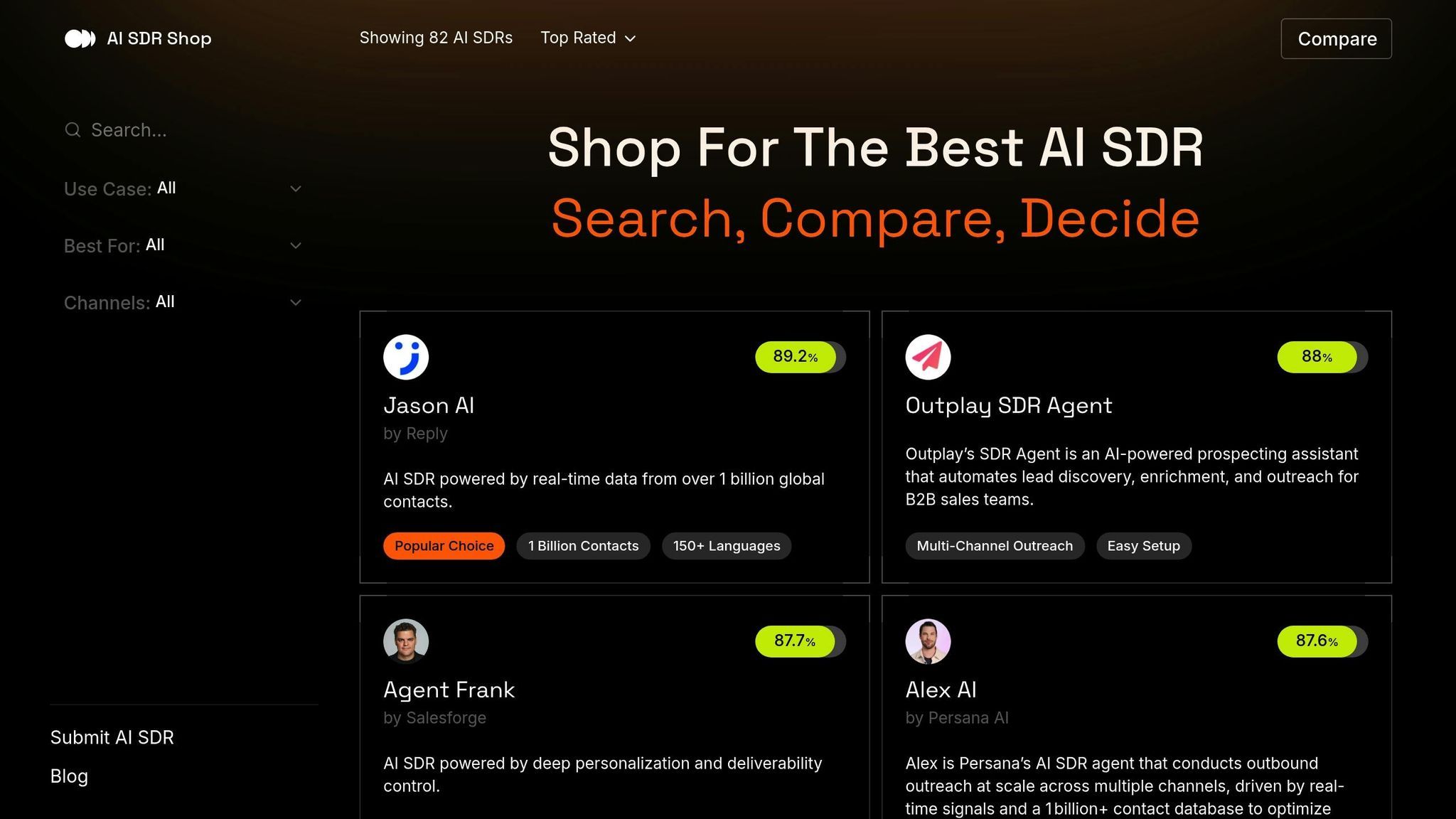

Using AI SDR Shop for Optimization

As your personalization efforts grow more sophisticated, tools like AI SDR Shop can help you streamline and optimize your strategies. If your current AI SDR solution isn’t meeting the demands of complex testing and personalization, it might be time to explore more advanced options.

AI SDR Shop offers a directory of over 80 AI SDR agents, making it easy to compare features and identify solutions that align with your needs. Look for capabilities like real-time data integration, detailed agent profiles, and multi-channel outreach - all critical for enhancing your personalization efforts.

When evaluating new AI SDR tools, prioritize seamless integration with your existing systems, such as your CRM and marketing automation platforms. This ensures that your new solution works smoothly with your current tech stack.

Use AI SDR Shop to explore and compare options without any cost. Review detailed profiles to find tools that match your tested strategies. With its focus on multi-channel outreach, AI SDR Shop can help you create a comprehensive engagement strategy that spans multiple platforms. By integrating an optimized AI SDR solution, you can build a scalable, data-driven personalization framework that delivers consistent results.

Key Takeaways and Next Steps

A/B testing your AI SDR personalization is all about creating a reliable way to figure out what truly connects with your prospects. The insights you gain from these tests lay the groundwork for steady, predictable sales growth.

The most successful companies don’t treat personalization testing as a one-and-done task. Buyer preferences shift, market dynamics evolve, and competitors keep entering the scene. It’s an ongoing process that requires regular attention.

Start with a single, clear hypothesis about your audience’s preferences. Maybe you want to test personalized subject lines, experiment with message timing, or adjust the depth of your content. Run your tests for at least two weeks to gather enough data to draw meaningful conclusions. Then, use those findings to fine-tune your broader outreach efforts.

Focus on statistical significance rather than chasing quick wins. For example, a 15% improvement that holds steady across 1,000 prospects is far more valuable than a flashy 50% boost from just 50 interactions. Make sure your test runs long enough and includes a large enough sample to deliver reliable and scalable results. Identify the biggest gap in your current strategy, and let that guide your next test.
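
The effect of sample size on reliability shows up clearly in a confidence interval. The sketch below computes a Wilson score interval, one common choice for proportions, for the same 6% reply rate observed on 50 and on 1,000 contacts. The 6% figure is an illustrative assumption, not data from a real campaign.

```python
from math import sqrt
from statistics import NormalDist


def wilson_interval(successes, n, confidence=0.95):
    """Wilson score confidence interval for a proportion."""
    if n <= 0:
        raise ValueError("n must be positive")
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    p = successes / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half_width = z * sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return center - half_width, center + half_width


# Same observed 6% reply rate, very different certainty.
print(wilson_interval(3, 50))     # wide interval
print(wilson_interval(60, 1000))  # much narrower interval
```

With 50 contacts, the plausible range for the true reply rate is several times wider than with 1,000, which is why a dramatic lift on a tiny sample often fades when the approach is rolled out.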

Next, figure out where your personalization efforts are falling short. If your messages are too generic, start by tailoring them to specific industries. If you’re already doing that, try testing different levels of research or tweaking the tone of your messaging.

As your testing becomes more advanced, you might realize your current AI SDR tool isn’t cutting it for complex personalization strategies. That’s where AI SDR Shop can help. It offers a free directory of over 80 AI SDR agents, making it easy to compare features like real-time data integration, multi-channel outreach, and advanced personalization capabilities.

The companies that consistently stay ahead of the competition are the ones that never stop testing and improving. Make A/B testing a core part of your sales strategy, and you’ll build a personalization approach that delivers measurable and lasting results.

FAQs

How can I make sure my A/B test results for AI SDR personalization are accurate and meaningful?

To get accurate and reliable results from your A/B tests for AI SDR personalization, aim for statistical significance - usually a 95% confidence level. This means your results are less likely to be random or coincidental. Ensure your sample size is big enough to generate dependable insights, and let the test run long enough to reveal meaningful patterns. Leverage AI tools to process and analyze large datasets efficiently, but resist the urge to draw conclusions too early. Keep an eye on the test's progress, and when clear trends become apparent, tweak your strategy accordingly. Effective A/B testing allows you to refine your personalization approach, leading to improved sales performance.

What mistakes should I avoid when running A/B tests for AI SDR personalization?

When conducting A/B tests for AI SDR personalization, there are several pitfalls you’ll want to sidestep to ensure your results are reliable and actionable:

Stopping tests too early: Cutting a test short before it reaches statistical significance can lead to misleading conclusions. Patience is key to gathering meaningful data.

Ignoring statistical power: Running tests with insufficient data can prevent you from detecting real differences between variations, leaving you with inconclusive results.

Overusing winning variations: Leaning too heavily on a single successful strategy might work in the short term, but it can backfire as customer preferences shift over time.

Neglecting proper test monitoring: If you’re not actively tracking performance throughout the test, you risk overlooking key insights or failing to catch errors as they arise.

Misinterpreting results: Missteps like relying on one-tailed tests or ignoring the impact of multiple comparisons can distort your findings and lead to poor decisions.

To get the most out of your A/B tests, focus on careful preparation, consistent monitoring, and rigorous statistical analysis. This approach will help you refine your AI SDR strategies with confidence.
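
For the multiple-comparisons issue mentioned above, one simple and conservative fix is the Bonferroni correction: divide your significance threshold by the number of comparisons you ran. The sketch below uses made-up p-values for three hypothetical tests.

```python
def bonferroni_significant(p_values, alpha=0.05):
    """Flag which tests stay significant after a Bonferroni correction."""
    if not p_values:
        return {}
    threshold = alpha / len(p_values)  # stricter cutoff as the number of tests grows
    return {name: p <= threshold for name, p in p_values.items()}


# Hypothetical p-values from three tests run on the same campaign.
results = {"subject_line": 0.04, "cta": 0.01, "opening_line": 0.20}
print(bonferroni_significant(results))
```

Here the subject-line result would pass an uncorrected 0.05 threshold but fails the corrected cutoff of about 0.0167, so it deserves a rerun before you act on it.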

How can I identify which personalization factors will most improve my sales metrics during an A/B test?

To figure out which personalization factors will most influence your sales metrics in an A/B test, start by zeroing in on elements that directly shape customer engagement. Think about things like the tone of your messaging, the phrasing of subject lines, or how your call-to-action is worded. By testing one variable at a time, you’ll be able to clearly see what’s making a difference. Let your sales goals guide the metrics you track - whether it’s conversion rates, response rates, or average order value. Digging into past sales and marketing data can also give you clues about which factors are likely to move the needle the most. This helps you prioritize tests that matter. Keep your experiments straightforward, measurable, and in sync with your overall sales strategy to get the best insights.